NEW! Download Gartner® Report: Rethink Enterprise Search to Power AI Assistants and Agents. Get Started.

SearchAI Private LLM

High-Performance LLM Inference Using Only CPUs

Enable AI Assistants easily and securely on your organization’s private data.

Schedule a Private Demo

Fixed Cost

Significantly reduce costs and complexity; our solutions easily integrate with existing hardware and virtual servers.

Highly Scalable

Deploy AI models that are scalable across your organization and unlock the full potential of your data.

Secure & Private

Keep your model, your inference requests and your data sets within the security domain of your organization.

Optimized for RAG

Set up AI assistants and conversational chatbots using your own data directly on the SearchAI platform.

What is RAG?

RAG stands for Retrieval-Augmented Generation. It is an approach that combines the capabilities of large language models (LLMs) with information retrieval from external data sources. Here’s how it works:

01

Asking Questions

A user starts a conversation with the chatbot by asking a question. The question is converted into a semantic retrieval query.

02

Fetching Answers

The retrieval component searches through a corpus of documents or data sources to find the most relevant information.

03

Creating Context

The retrieved information, along with the user question, is passed to a large language model (LLM) for processing.

04

Delivering Results

The language model generates a final answer by synthesizing and reasoning over the retrieved information.

Schedule Private Demo

This allows RAG models to provide more factual and up-to-date responses by leveraging external knowledge sources, rather than being limited to what’s contained in the language model’s training data.
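The four steps above can be sketched in a few lines of Python. This is a minimal, self-contained illustration, not SearchAI's implementation: the corpus is made-up sample text, a bag-of-words cosine similarity stands in for a real semantic embedding model, and `generate` is a hypothetical placeholder for whatever LLM client you use.

```python
import math
import re
from collections import Counter

# Hypothetical sample corpus standing in for your organization's documents.
CORPUS = [
    "Invoices are due within 30 days of the purchase order date.",
    "To reset the pump controller, hold the power button for ten seconds.",
    "Tax rulings issued after 2020 supersede earlier guidance on depreciation.",
]


def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep runs of letters and digits.
    return re.findall(r"[a-z0-9]+", text.lower())


def to_query_vector(text: str) -> Counter:
    # Step 1 (Asking Questions): turn text into a retrieval vector.
    # Assumption: term counts stand in for a semantic embedding.
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, corpus: list[str], k: int = 1) -> list[str]:
    # Step 2 (Fetching Answers): rank documents by similarity and keep the top k.
    q = to_query_vector(question)
    ranked = sorted(corpus, key=lambda doc: cosine(q, to_query_vector(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    # Step 3 (Creating Context): combine retrieved passages with the question.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}\nAnswer:"


def generate(prompt: str) -> str:
    # Step 4 (Delivering Results): hypothetical placeholder for an LLM call.
    return f"[LLM response to a {len(prompt)}-character prompt]"


def answer(question: str) -> str:
    passages = retrieve(question, CORPUS)
    return generate(build_prompt(question, passages))
```

For example, `retrieve("How do I reset the pump controller?", CORPUS)` returns the pump-controller passage, because it shares the most terms with the question. In a production system the vectorizer would typically be an embedding model, and ranking would use a vector index rather than a linear scan.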

Not sure what's best?

Schedule a private consultation to see how SearchAI will make a difference across your enterprise.

Ask Us Questions

Setting Up RAG ChatBots Efficiently and Securely

SearchAI provides a single platform for deploying RAG chatbots on your data, making it easy to leverage the power of RAG without compromising data privacy or security. Setup takes a few simple steps:

Data Ingestion

Import your proprietary data sources or document corpora into the SearchAI platform. This could include manuals, legal documents, knowledge bases, etc.

ChatBot Interface Setup

Set up your chatbot on your private data using SearchAI.

Index Creation

Build efficient search indexes over your data to enable fast retrieval.

Query Handling

When a user query comes in, SearchAI retrieves relevant information and passes it to your LLM to generate a final response.

Model Integration

Connect your chatbot to SearchAI Private LLM.

Testing & Refinement

Evaluate your chatbot’s performance, fine-tune prompts and make adjustments to improve accuracy.

Deployment

Once finalized, deploy your private RAG chatbot on your own hardware or SearchAI’s secure cloud infrastructure.
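As a rough illustration of the Data Ingestion, Index Creation and Query Handling steps, the Python sketch below chunks documents, builds an in-memory inverted index and returns the chunks that best match a query. It assumes a fixed word-window chunking strategy and simple keyword-overlap scoring; the names `chunk`, `InvertedIndex` and `search` are hypothetical and do not reflect SearchAI's actual APIs.

```python
import re
from collections import defaultdict


def chunk(text: str, max_words: int = 50) -> list[str]:
    # Data Ingestion: split a document into fixed-size word windows
    # (an assumed chunking strategy; real pipelines vary).
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def terms(text: str) -> set[str]:
    # Unique lowercase terms in a piece of text.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class InvertedIndex:
    """Index Creation: map each term to the IDs of the chunks that contain it."""

    def __init__(self) -> None:
        self.chunks: list[str] = []
        self.postings: dict[str, set[int]] = defaultdict(set)

    def add_document(self, text: str, max_words: int = 50) -> None:
        for piece in chunk(text, max_words):
            chunk_id = len(self.chunks)
            self.chunks.append(piece)
            for term in terms(piece):
                self.postings[term].add(chunk_id)

    def search(self, query: str, k: int = 3) -> list[str]:
        # Query Handling: score each chunk by how many query terms it contains,
        # then return the top k (ties broken by insertion order).
        scores: dict[int, int] = defaultdict(int)
        for term in terms(query):
            for chunk_id in self.postings.get(term, ()):
                scores[chunk_id] += 1
        best = sorted(scores, key=lambda cid: (-scores[cid], cid))[:k]
        return [self.chunks[cid] for cid in best]
```

For example, after `index.add_document("Invoices are due within 30 days.")`, calling `index.search("When are invoices due?", k=1)` returns that chunk, which would then be passed to the LLM as described in the Query Handling step. A semantic variant would store embedding vectors per chunk instead of term postings.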

Get Started with RAG

The Benefits of RAG

There are several key benefits to using a RAG approach:

Schedule Private Demo

Improved Accuracy & Relevance

By retrieving information from pre-defined authoritative sources, RAG can provide more factual and reliable responses than LLMs alone.

Access to Up-to-Date Information

Domain Knowledge Expansion

Reduced Hallucination

Enhanced Reasoning Capabilities

Schedule Private Demo

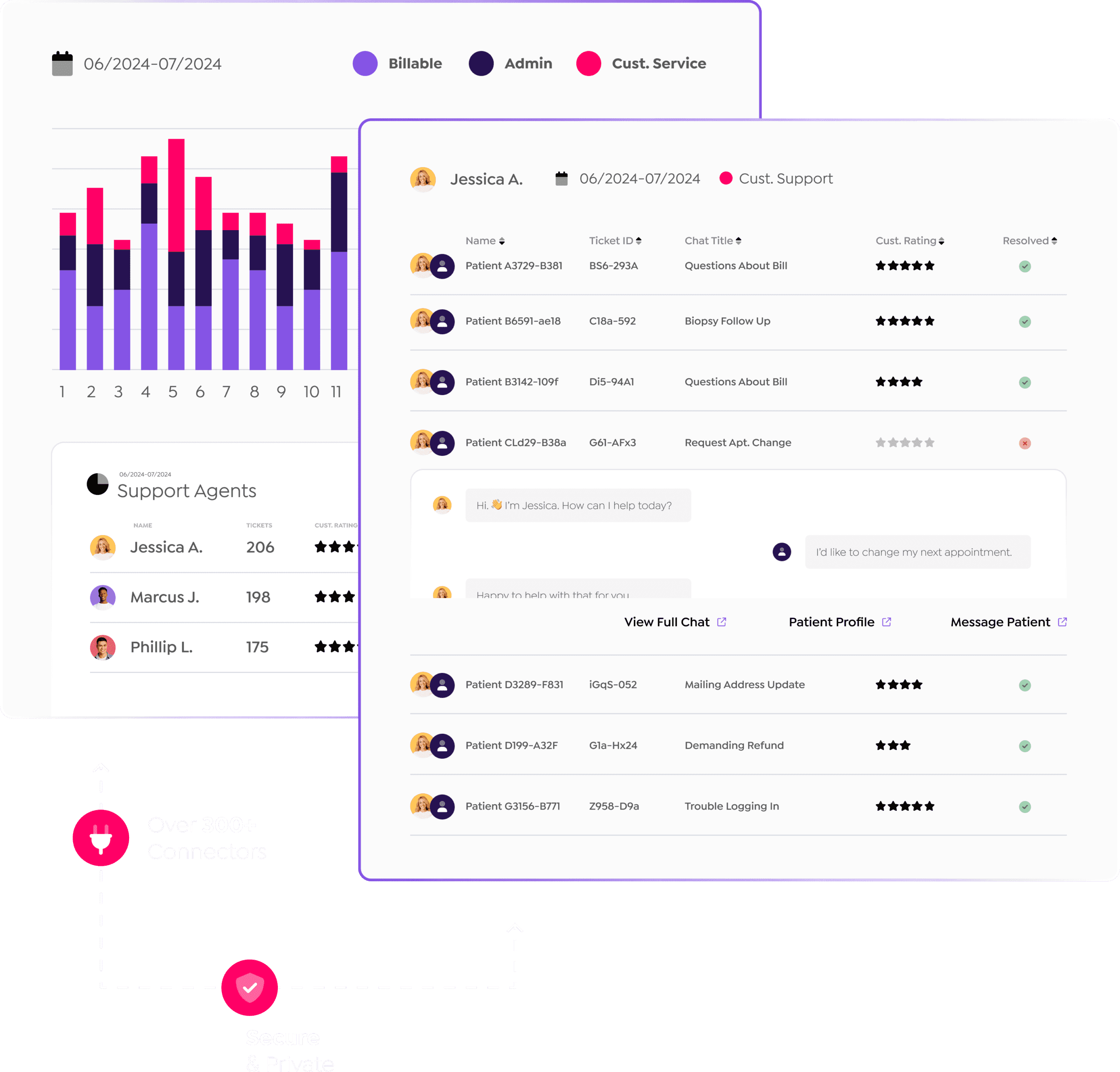

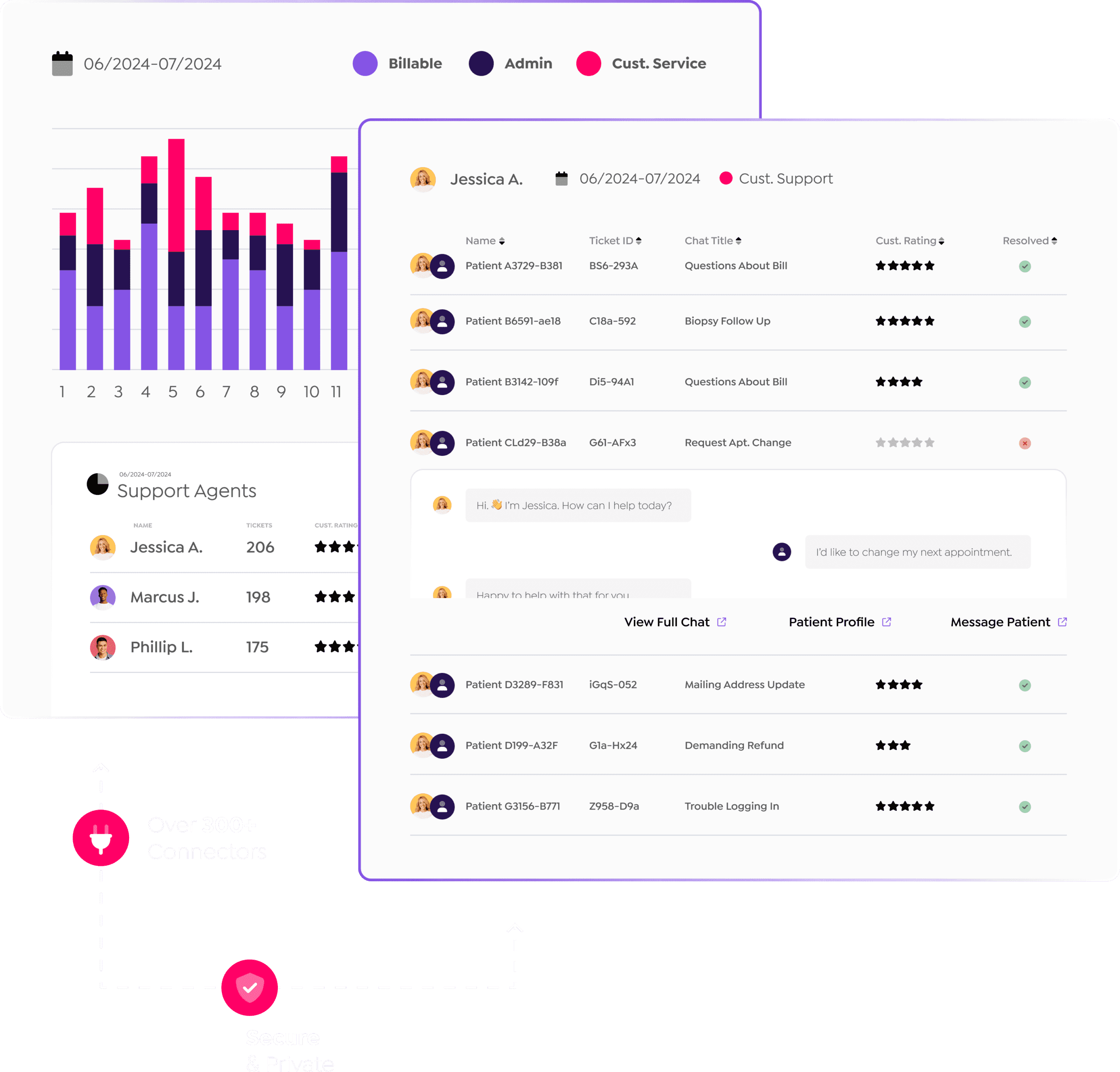

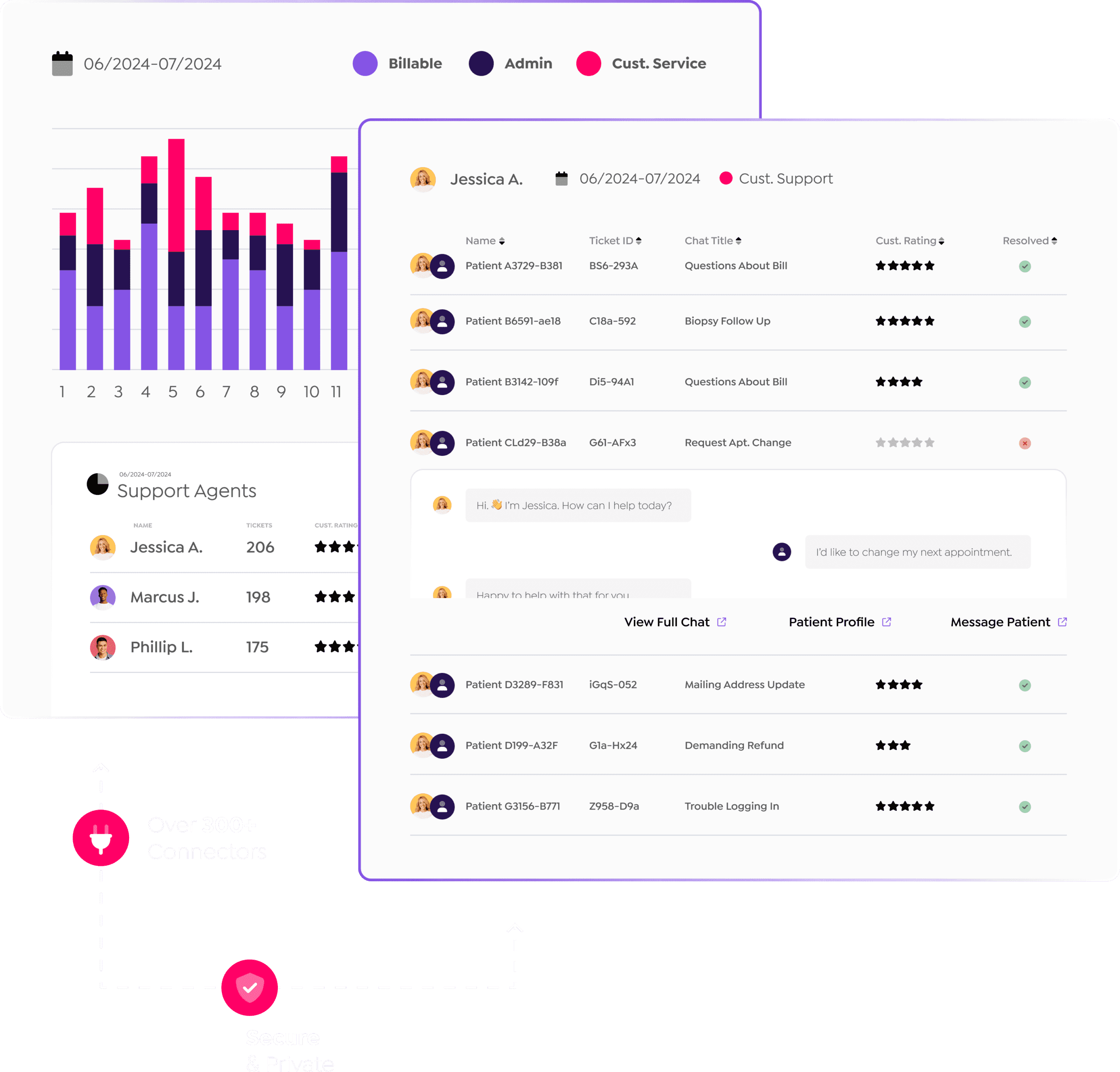

Modernize Customer Service with AI Assistants

Implementing AI assistants on your website or customer portal can revolutionize your customer service operations, providing 24/7 support, instant responses, and personalized experiences.

How to Calculate the Total Cost of RAG-Based Solutions

Calculating a 3- to 5-year total cost of ownership is crucial for RAG solutions. Generative AI requires strategic planning to manage costs and ensure ROI across talent, infrastructure, software, data and support.

Elevate Your Adobe Experience Manager (AEM) Site with AI Chatbots

AEM sites can now incorporate Generative AI-based chatbots to meet customer demands for quick access to sales, customer service or knowledge management information.

Use Cases for SearchAI Private LLM

The benefits of AI assistants include a better customer experience, faster responses to service tickets, higher employee output and greater organizational knowledge. How will you leverage SearchAI to grow your organization?

Government

Maintaining compliance clarity.

A government agency launched a chatbot for tax professionals to ask questions on complex laws, rulings and decisions.

Get Started with RAG

Manufacturing

Aligning organizational knowledge.

A manufacturing facility needs to find information in product manuals quickly.

Finance

Expediting customer support responses.

A finance department can query information about purchase orders and invoices for efficient customer support.

Schedule Strategy Session

See For Yourself

Discover the strategic benefits of RAG: get started with a private demo built on your own data.

Schedule A Private Demo

Enhance your users’ digital experience.

We build AI-driven software to help organizations leverage their unstructured and structured data for operational success.

4870 Sadler Road, Suite 300, Glen Allen, VA 23060 sales@searchblox.com | (866) 933-3626

Still learning about AI? See our comprehensive Enterprise Search RAG 101, ChatBot 101 and AI Agents 101 guides.

©2025 SearchBlox Software, Inc. All rights reserved.
